Add rest-api example (#889)

1

.gitignore

vendored

@@ -76,7 +76,6 @@ docs/_build/

|

||||

target/

|

||||

|

||||

# Jupyter Notebook

|

||||

*.yaml

|

||||

|

||||

# IPython

|

||||

profile_default/

|

||||

|

||||

@@ -26,6 +26,14 @@ Book a [1-on-1 Session](https://cal.com/taranjeetio/ec) with Taranjeet, the foun

|

||||

pip install --upgrade embedchain

|

||||

```

|

||||

|

||||

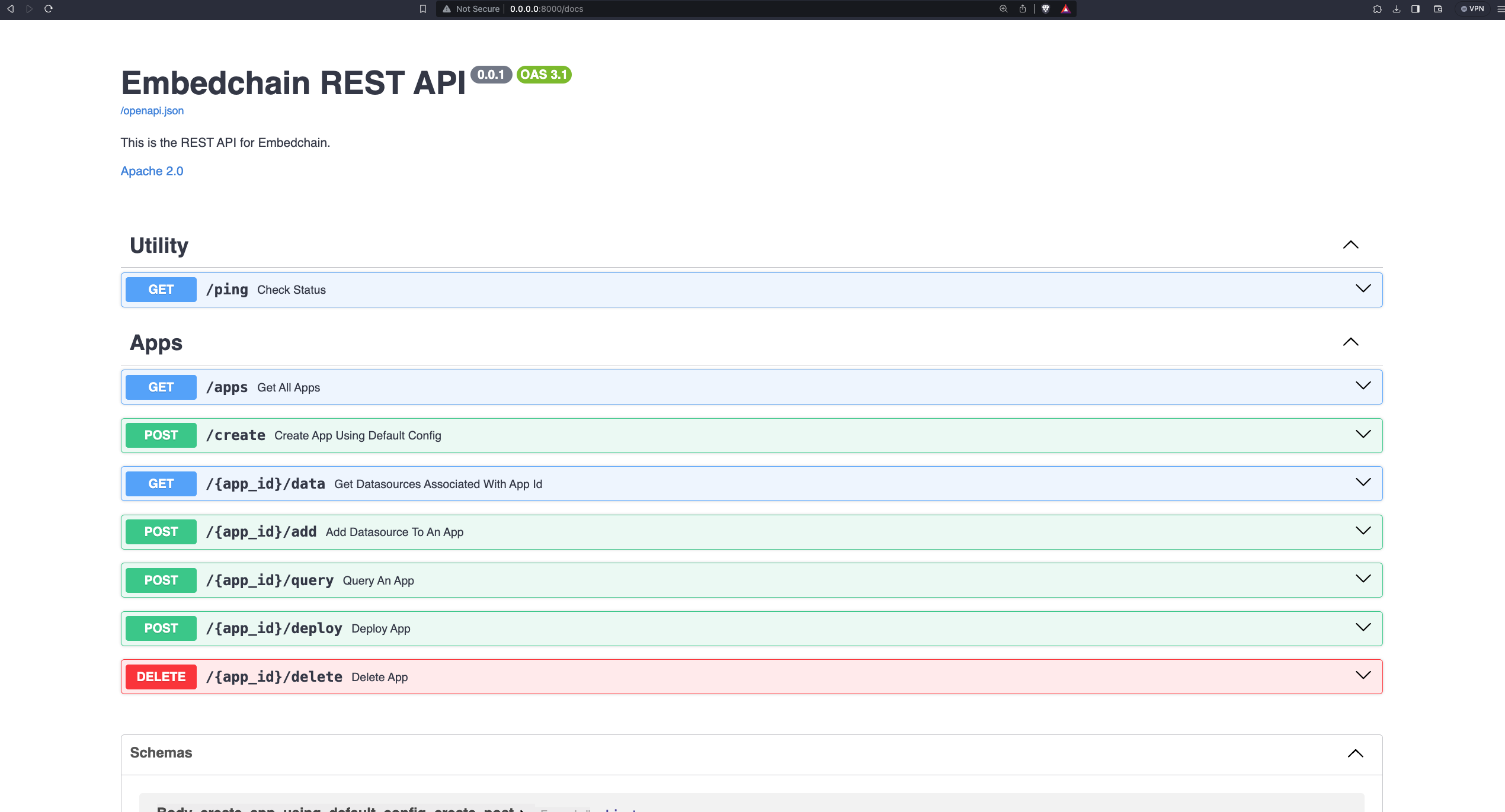

To run Embedchain as a REST API server, use:

|

||||

|

||||

```bash

|

||||

docker run -d --name embedchain -p 8000:8000 embedchain/app:rest-api-latest

|

||||

```

|

||||

|

||||

Navigate to http://0.0.0.0:8000/docs to interact with the API.

|

||||

|

||||

## 🔍 Demo

|

||||

|

||||

Try out embedchain in your browser:

|

||||

|

||||

@@ -1,7 +1,7 @@

|

||||

llm:

|

||||

provider: anthropic

|

||||

model: 'claude-instant-1'

|

||||

config:

|

||||

model: 'claude-instant-1'

|

||||

temperature: 0.5

|

||||

max_tokens: 1000

|

||||

top_p: 1

|

||||

|

||||

@@ -4,8 +4,8 @@ app:

|

||||

|

||||

llm:

|

||||

provider: azure_openai

|

||||

model: gpt-35-turbo

|

||||

config:

|

||||

model: gpt-35-turbo

|

||||

deployment_name: your_llm_deployment_name

|

||||

temperature: 0.5

|

||||

max_tokens: 1000

|

||||

|

||||

@@ -5,8 +5,8 @@ app:

|

||||

|

||||

llm:

|

||||

provider: openai

|

||||

model: 'gpt-3.5-turbo'

|

||||

config:

|

||||

model: 'gpt-3.5-turbo'

|

||||

temperature: 0.5

|

||||

max_tokens: 1000

|

||||

top_p: 1

|

||||

|

||||

@@ -1,7 +1,7 @@

|

||||

llm:

|

||||

provider: cohere

|

||||

model: large

|

||||

config:

|

||||

model: large

|

||||

temperature: 0.5

|

||||

max_tokens: 1000

|

||||

top_p: 1

|

||||

|

||||

@@ -4,8 +4,8 @@ app:

|

||||

|

||||

llm:

|

||||

provider: openai

|

||||

model: 'gpt-3.5-turbo'

|

||||

config:

|

||||

model: 'gpt-3.5-turbo'

|

||||

temperature: 0.5

|

||||

max_tokens: 1000

|

||||

top_p: 1

|

||||

|

||||

@@ -1,7 +1,7 @@

|

||||

llm:

|

||||

provider: huggingface

|

||||

model: 'google/flan-t5-xxl'

|

||||

config:

|

||||

model: 'google/flan-t5-xxl'

|

||||

temperature: 0.5

|

||||

max_tokens: 1000

|

||||

top_p: 0.5

|

||||

|

||||

@@ -1,7 +1,7 @@

|

||||

llm:

|

||||

provider: llama2

|

||||

model: 'a16z-infra/llama13b-v2-chat:df7690f1994d94e96ad9d568eac121aecf50684a0b0963b25a41cc40061269e5'

|

||||

config:

|

||||

model: 'a16z-infra/llama13b-v2-chat:df7690f1994d94e96ad9d568eac121aecf50684a0b0963b25a41cc40061269e5'

|

||||

temperature: 0.5

|

||||

max_tokens: 1000

|

||||

top_p: 0.5

|

||||

|

||||

@@ -7,8 +7,8 @@ app:

|

||||

|

||||

llm:

|

||||

provider: openai

|

||||

model: 'gpt-3.5-turbo'

|

||||

config:

|

||||

model: 'gpt-3.5-turbo'

|

||||

temperature: 0.5

|

||||

max_tokens: 1000

|

||||

top_p: 1

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

llm:

|

||||

provider: vertexai

|

||||

model: 'chat-bison'

|

||||

config:

|

||||

model: 'chat-bison'

|

||||

temperature: 0.5

|

||||

top_p: 0.5

|

||||

|

||||

3

docs/api-reference/add-datasource-to-an-app.mdx

Normal file

@@ -0,0 +1,3 @@

|

||||

---

|

||||

openapi: post /{app_id}/add

|

||||

---

|

||||

3

docs/api-reference/chat-with-an-app.mdx

Normal file

@@ -0,0 +1,3 @@

|

||||

---

|

||||

openapi: post /{app_id}/chat

|

||||

---

|

||||

3

docs/api-reference/check-status.mdx

Normal file

@@ -0,0 +1,3 @@

|

||||

---

|

||||

openapi: get /ping

|

||||

---

|

||||

3

docs/api-reference/create-app.mdx

Normal file

@@ -0,0 +1,3 @@

|

||||

---

|

||||

openapi: post /create

|

||||

---

|

||||

3

docs/api-reference/delete-app.mdx

Normal file

@@ -0,0 +1,3 @@

|

||||

---

|

||||

openapi: delete /{app_id}/delete

|

||||

---

|

||||

3

docs/api-reference/deploy-app.mdx

Normal file

@@ -0,0 +1,3 @@

|

||||

---

|

||||

openapi: post /{app_id}/deploy

|

||||

---

|

||||

3

docs/api-reference/get-all-apps.mdx

Normal file

@@ -0,0 +1,3 @@

|

||||

---

|

||||

openapi: get /apps

|

||||

---

|

||||

@@ -0,0 +1,3 @@

|

||||

---

|

||||

openapi: get /{app_id}/data

|

||||

---

|

||||

102

docs/api-reference/getting-started.mdx

Normal file

@@ -0,0 +1,102 @@

|

||||

---

|

||||

title: "🌍 Getting Started"

|

||||

---

|

||||

|

||||

## Quick Start

|

||||

|

||||

To run Embedchain as a REST API server, use:

|

||||

|

||||

```bash

|

||||

docker run -d --name embedchain -p 8000:8000 embedchain/app:rest-api-latest

|

||||

```

|

||||

|

||||

Open your browser and navigate to http://0.0.0.0:8000/docs to interact with the API. A full Swagger docs playground there describes all the API endpoints.

|

||||

|

||||

|

||||

|

||||

## Creating your first App

|

||||

|

||||

An app requires an `app_id` to be created. The `app_id` is a unique identifier for your app.

|

||||

|

||||

By default, the open-source **gpt4all** model is used to perform operations. You can also specify your own config by uploading a config YAML file.

|

||||

|

||||

For example, create a `config.yaml` file (adjust according to your requirements):

|

||||

|

||||

```yaml
app:
  config:
    id: "default-app"

llm:
  provider: openai
  config:
    model: "gpt-3.5-turbo"
    temperature: 0.5
    max_tokens: 1000
    top_p: 1
    stream: false
    template: |
      Use the following pieces of context to answer the query at the end.
      If you don't know the answer, just say that you don't know, don't try to make up an answer.

      $context

      Query: $query

      Helpful Answer:

vectordb:
  provider: chroma
  config:
    collection_name: "rest-api-app"
    dir: db
    allow_reset: true

embedder:
  provider: openai
  config:
    model: "text-embedding-ada-002"
```
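The `template` field in the config uses `$context` and `$query` placeholders. As a rough illustration of how such placeholders can be filled, the sketch below uses Python's standard `string.Template`. Whether Embedchain substitutes them in exactly this way is an assumption, `render_prompt` is a hypothetical helper, and the sample context and query are made up.

```python
from string import Template

# Prompt template copied from the config example.
PROMPT = Template(
    "Use the following pieces of context to answer the query at the end.\n"
    "If you don't know the answer, just say that you don't know, "
    "don't try to make up an answer.\n"
    "\n"
    "$context\n"
    "\n"
    "Query: $query\n"
    "\n"
    "Helpful Answer:"
)


def render_prompt(context: str, query: str) -> str:
    """Fill the $context and $query placeholders (illustrative, not Embedchain API)."""
    return PROMPT.substitute(context=context, query=query)


if __name__ == "__main__":
    print(render_prompt("Embedchain exposes a REST API on port 8000.", "Which port is used?"))
```

`substitute` raises `KeyError` if a placeholder is left unfilled, which makes a misspelled placeholder name in a custom template easy to spot.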

|

||||

|

||||

To learn more about custom configurations, check out the [Custom configurations](https://docs.embedchain.ai/advanced/configuration).

|

||||

To explore more examples of config YAMLs for Embedchain, visit [embedchain/configs](https://github.com/embedchain/embedchain/tree/main/configs).

|

||||

|

||||

Now, you can upload this config file in the request body.

|

||||

|

||||

**Note:** To use custom models, an **API key** might be required. Refer to the table below to determine the necessary API key for your provider.

|

||||

|

||||

| Keys                       | Providers                      |
| -------------------------- | ------------------------------ |
| `OPENAI_API_KEY`           | OpenAI, Azure OpenAI, Jina etc |
| `OPENAI_API_TYPE`          | Azure OpenAI                   |
| `OPENAI_API_BASE`          | Azure OpenAI                   |
| `OPENAI_API_VERSION`       | Azure OpenAI                   |
| `COHERE_API_KEY`           | Cohere                         |
| `ANTHROPIC_API_KEY`        | Anthropic                      |
| `JINACHAT_API_KEY`         | Jina                           |
| `HUGGINGFACE_ACCESS_TOKEN` | Huggingface                    |
| `REPLICATE_API_TOKEN`      | LLAMA2                         |

|

||||

|

||||

To provide them, pass each key to the `docker run` command with the `-e` flag. For example:

|

||||

|

||||

```bash

|

||||

docker run -d --name embedchain -p 8000:8000 -e OPENAI_API_KEY=YOUR_API_KEY embedchain/app:rest-api-latest

|

||||

```
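Before starting the container, it can help to check which keys a given provider needs. The sketch below transcribes the key table into a Python mapping and reports missing variables. The lowercase provider labels and the helper names `missing_keys` and `docker_env_flags` are illustrative assumptions, not part of Embedchain.

```python
import os

# Environment variables per provider, transcribed from the key table.
# The lowercase provider labels are illustrative, not confirmed config values.
REQUIRED_ENV = {
    "openai": ["OPENAI_API_KEY"],
    "azure_openai": [
        "OPENAI_API_KEY",
        "OPENAI_API_TYPE",
        "OPENAI_API_BASE",
        "OPENAI_API_VERSION",
    ],
    "cohere": ["COHERE_API_KEY"],
    "anthropic": ["ANTHROPIC_API_KEY"],
    "jina": ["OPENAI_API_KEY", "JINACHAT_API_KEY"],
    "huggingface": ["HUGGINGFACE_ACCESS_TOKEN"],
    "llama2": ["REPLICATE_API_TOKEN"],
}


def missing_keys(provider, env=None):
    """Return the variables for `provider` that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_ENV.get(provider, []) if not env.get(name)]


def docker_env_flags(provider):
    """Render the `-e NAME=...` flags to add to `docker run` (placeholder values)."""
    return " ".join(f"-e {name}=YOUR_VALUE" for name in REQUIRED_ENV.get(provider, []))


if __name__ == "__main__":
    print(missing_keys("azure_openai"))
    print(docker_env_flags("azure_openai"))
```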

|

||||

|

||||

Once the server is running, a call to the `/create` endpoint with your `app_id` (and, optionally, the config file) creates a new Embedchain app.
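As a minimal sketch of the create call, the snippet below builds a `POST /create` request with `app_id` as a query parameter, following the OpenAPI description added in this commit. It only constructs the request and does not send it. The base URL, the helper name `build_create_request`, and the omission of the optional multipart config upload are assumptions made for brevity.

```python
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:8000"  # assumption: container started with -p 8000:8000


def build_create_request(app_id: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST /create request for the given app_id."""
    query = urllib.parse.urlencode({"app_id": app_id})
    return urllib.request.Request(f"{base_url}/create?{query}", method="POST")


if __name__ == "__main__":
    req = build_create_request("my-app")
    print(req.get_method(), req.full_url)
    # To send it against a running server:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.read().decode())
```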

|

||||

|

||||

## Deploying your App to Embedchain Platform

|

||||

|

||||

This feature creates a public API endpoint for your app, so it can be queried from anywhere. It sets up a _pipeline_ for your app that syncs the data from time to time to keep results current.

|

||||

|

||||

|

||||

|

||||

To use this functionality, create an account at app.embedchain.ai, then generate a new [API key](https://app.embedchain.ai/settings/keys/).

|

||||

|

||||

|

||||

|

||||

Using this API key, you can deploy your app to the platform.
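A hedged sketch of the deploy call: the OpenAPI file in this commit describes `POST /{app_id}/deploy` with a JSON body containing `api_key`. The helper below builds that request without sending it. The base URL and the name `build_deploy_request` are assumptions, and `ec-xxx` is the placeholder value from the spec's example.

```python
import json
import urllib.parse
import urllib.request


def build_deploy_request(
    app_id: str, api_key: str, base_url: str = "http://localhost:8000"
) -> urllib.request.Request:
    """Build (but do not send) a POST /{app_id}/deploy request."""
    path = urllib.parse.quote(app_id, safe="")  # keep the app_id a single path segment
    body = json.dumps({"api_key": api_key}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/{path}/deploy",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_deploy_request("default-app", "ec-xxx")
    print(req.get_method(), req.full_url, req.data.decode())
```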

|

||||

3

docs/api-reference/query-an-app.mdx

Normal file

@@ -0,0 +1,3 @@

|

||||

---

|

||||

openapi: post /{app_id}/query

|

||||

---

|

||||

@@ -1,93 +0,0 @@

|

||||

---

|

||||

title: '🌍 API Server'

|

||||

---

|

||||

|

||||

The API server example can be found [here](https://github.com/embedchain/embedchain/tree/main/examples/api_server).

|

||||

|

||||

It is a Flask based server that integrates the `embedchain` package, offering endpoints to add, query, and chat to engage in conversations with a chatbot using JSON requests.

|

||||

|

||||

### 🐳 Docker Setup

|

||||

|

||||

- Open variables.env, and edit it to add your 🔑 `OPENAI_API_KEY`.

|

||||

- To setup your api server using docker, run the following command inside this folder using your terminal.

|

||||

|

||||

```bash

|

||||

docker-compose up --build

|

||||

```

|

||||

|

||||

📝 Note: The build command might take a while to install all the packages depending on your system resources.

|

||||

|

||||

### 🚀 Usage Instructions

|

||||

|

||||

- Your api server is running on [http://localhost:5000/](http://localhost:5000/)

|

||||

- To use the api server, make an api call to the endpoints `/add`, `/query` and `/chat` using the json formats discussed below.

|

||||

- To add data sources to the bot (/add):

|

||||

```json

|

||||

// Request

|

||||

{

|

||||

"data_type": "your_data_type_here",

|

||||

"url_or_text": "your_url_or_text_here"

|

||||

}

|

||||

|

||||

// Response

|

||||

{

|

||||

"data": "Added data_type: url_or_text"

|

||||

}

|

||||

```

|

||||

- To ask queries from the bot (/query):

|

||||

```json

|

||||

// Request

|

||||

{

|

||||

"question": "your_question_here"

|

||||

}

|

||||

|

||||

// Response

|

||||

{

|

||||

"data": "your_answer_here"

|

||||

}

|

||||

```

|

||||

- To chat with the bot (/chat):

|

||||

```json

|

||||

// Request

|

||||

{

|

||||

"question": "your_question_here"

|

||||

}

|

||||

|

||||

// Response

|

||||

{

|

||||

"data": "your_answer_here"

|

||||

}

|

||||

```

|

||||

|

||||

### 📡 Curl Call Formats

|

||||

|

||||

- To add data sources to the bot (/add):

|

||||

```bash

|

||||

curl -X POST \

|

||||

-H "Content-Type: application/json" \

|

||||

-d '{

|

||||

"data_type": "your_data_type_here",

|

||||

"url_or_text": "your_url_or_text_here"

|

||||

}' \

|

||||

http://localhost:5000/add

|

||||

```

|

||||

- To ask queries from the bot (/query):

|

||||

```bash

|

||||

curl -X POST \

|

||||

-H "Content-Type: application/json" \

|

||||

-d '{

|

||||

"question": "your_question_here"

|

||||

}' \

|

||||

http://localhost:5000/query

|

||||

```

|

||||

- To chat with the bot (/chat):

|

||||

```bash

|

||||

curl -X POST \

|

||||

-H "Content-Type: application/json" \

|

||||

-d '{

|

||||

"question": "your_question_here"

|

||||

}' \

|

||||

http://localhost:5000/chat

|

||||

```

|

||||

|

||||

🎉 Happy Chatting! 🎉

|

||||

@@ -14,6 +14,7 @@

|

||||

"dark": "#020415"

|

||||

}

|

||||

},

|

||||

"openapi": ["/rest-api.json"],

|

||||

"metadata": {

|

||||

"og:image": "/images/og.png",

|

||||

"twitter:site": "@embedchain"

|

||||

@@ -28,8 +29,8 @@

|

||||

"url": "https://twitter.com/embedchain"

|

||||

},

|

||||

{

|

||||

"name":"Slack",

|

||||

"url":"https://join.slack.com/t/embedchain/shared_invite/zt-22uwz3c46-Zg7cIh5rOBteT_xe1jwLDw"

|

||||

"name": "Slack",

|

||||

"url": "https://join.slack.com/t/embedchain/shared_invite/zt-22uwz3c46-Zg7cIh5rOBteT_xe1jwLDw"

|

||||

},

|

||||

{

|

||||

"name": "Discord",

|

||||

@@ -46,11 +47,20 @@

|

||||

"navigation": [

|

||||

{

|

||||

"group": "Get started",

|

||||

"pages": ["get-started/quickstart", "get-started/introduction", "get-started/faq", "get-started/examples"]

|

||||

"pages": [

|

||||

"get-started/quickstart",

|

||||

"get-started/introduction",

|

||||

"get-started/faq",

|

||||

"get-started/examples"

|

||||

]

|

||||

},

|

||||

{

|

||||

"group": "Components",

|

||||

"pages": ["components/llms", "components/embedding-models", "components/vector-databases"]

|

||||

"pages": [

|

||||

"components/llms",

|

||||

"components/embedding-models",

|

||||

"components/vector-databases"

|

||||

]

|

||||

},

|

||||

{

|

||||

"group": "Data sources",

|

||||

@@ -81,16 +91,34 @@

|

||||

"group": "Advanced",

|

||||

"pages": ["advanced/configuration"]

|

||||

},

|

||||

{

|

||||

"group": "Rest API",

|

||||

"pages": [

|

||||

"api-reference/getting-started",

|

||||

"api-reference/check-status",

|

||||

"api-reference/get-all-apps",

|

||||

"api-reference/create-app",

|

||||

"api-reference/query-an-app",

|

||||

"api-reference/add-datasource-to-an-app",

|

||||

"api-reference/get-datasources-associated-with-app-id",

|

||||

"api-reference/deploy-app",

|

||||

"api-reference/delete-app"

|

||||

]

|

||||

},

|

||||

{

|

||||

"group": "Examples",

|

||||

"pages": ["examples/full_stack", "examples/api_server", "examples/discord_bot", "examples/slack_bot", "examples/telegram_bot", "examples/whatsapp_bot", "examples/poe_bot"]

|

||||

"pages": [

|

||||

"examples/full_stack",

|

||||

"examples/discord_bot",

|

||||

"examples/slack_bot",

|

||||

"examples/telegram_bot",

|

||||

"examples/whatsapp_bot",

|

||||

"examples/poe_bot"

|

||||

]

|

||||

},

|

||||

{

|

||||

"group": "Community",

|

||||

"pages": [

|

||||

"community/connect-with-us",

|

||||

"community/showcase"

|

||||

]

|

||||

"pages": ["community/connect-with-us", "community/showcase"]

|

||||

},

|

||||

{

|

||||

"group": "Integrations",

|

||||

@@ -108,16 +136,13 @@

|

||||

},

|

||||

{

|

||||

"group": "Product",

|

||||

"pages": [

|

||||

"product/release-notes"

|

||||

]

|

||||

"pages": ["product/release-notes"]

|

||||

}

|

||||

|

||||

],

|

||||

"footerSocials": {

|

||||

"website": "https://embedchain.ai",

|

||||

"github": "https://github.com/embedchain/embedchain",

|

||||

"slack":"https://join.slack.com/t/embedchain/shared_invite/zt-22uwz3c46-Zg7cIh5rOBteT_xe1jwLDw",

|

||||

"slack": "https://join.slack.com/t/embedchain/shared_invite/zt-22uwz3c46-Zg7cIh5rOBteT_xe1jwLDw",

|

||||

"discord": "https://discord.gg/6PzXDgEjG5",

|

||||

"twitter": "https://twitter.com/embedchain",

|

||||

"linkedin": "https://www.linkedin.com/company/embedchain"

|

||||

|

||||

428

docs/rest-api.json

Normal file

@@ -0,0 +1,428 @@

|

||||

{

|

||||

"openapi": "3.1.0",

|

||||

"info": {

|

||||

"title": "Embedchain REST API",

|

||||

"description": "This is the REST API for Embedchain.",

|

||||

"license": {

|

||||

"name": "Apache 2.0",

|

||||

"url": "https://github.com/embedchain/embedchain/blob/main/LICENSE"

|

||||

},

|

||||

"version": "0.0.1"

|

||||

},

|

||||

"paths": {

|

||||

"/ping": {

|

||||

"get": {

|

||||

"tags": ["Utility"],

|

||||

"summary": "Check Status",

|

||||

"description": "Endpoint to check the status of the API.",

|

||||

"operationId": "check_status_ping_get",

|

||||

"responses": {

|

||||

"200": {

|

||||

"description": "Successful Response",

|

||||

"content": { "application/json": { "schema": {} } }

|

||||

}

|

||||

}

|

||||

}

|

||||

},

|

||||

"/apps": {

|

||||

"get": {

|

||||

"tags": ["Apps"],

|

||||

"summary": "Get All Apps",

|

||||

"description": "Get all apps.",

|

||||

"operationId": "get_all_apps_apps_get",

|

||||

"responses": {

|

||||

"200": {

|

||||

"description": "Successful Response",

|

||||

"content": { "application/json": { "schema": {} } }

|

||||

}

|

||||

}

|

||||

}

|

||||

},

|

||||

"/create": {

|

||||

"post": {

|

||||

"tags": ["Apps"],

|

||||

"summary": "Create App",

|

||||

"description": "Create a new app using App ID.",

|

||||

"operationId": "create_app_using_default_config_create_post",

|

||||

"parameters": [

|

||||

{

|

||||

"name": "app_id",

|

||||

"in": "query",

|

||||

"required": true,

|

||||

"schema": { "type": "string", "title": "App Id" }

|

||||

}

|

||||

],

|

||||

"requestBody": {

|

||||

"content": {

|

||||

"multipart/form-data": {

|

||||

"schema": {

|

||||

"allOf": [

|

||||

{

|

||||

"$ref": "#/components/schemas/Body_create_app_using_default_config_create_post"

|

||||

}

|

||||

],

|

||||

"title": "Body"

|

||||

}

|

||||

}

|

||||

}

|

||||

},

|

||||

"responses": {

|

||||

"200": {

|

||||

"description": "Successful Response",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/DefaultResponse" }

|

||||

}

|

||||

}

|

||||

},

|

||||

"422": {

|

||||

"description": "Validation Error",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/HTTPValidationError" }

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

},

|

||||

"/{app_id}/data": {

|

||||

"get": {

|

||||

"tags": ["Apps"],

|

||||

"summary": "Get Datasources Associated With App Id",

|

||||

"description": "Get all datasources for an app.",

|

||||

"operationId": "get_datasources_associated_with_app_id__app_id__data_get",

|

||||

"parameters": [

|

||||

{

|

||||

"name": "app_id",

|

||||

"in": "path",

|

||||

"required": true,

|

||||

"schema": { "type": "string", "title": "App Id" }

|

||||

}

|

||||

],

|

||||

"responses": {

|

||||

"200": {

|

||||

"description": "Successful Response",

|

||||

"content": { "application/json": { "schema": {} } }

|

||||

},

|

||||

"422": {

|

||||

"description": "Validation Error",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/HTTPValidationError" }

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

},

|

||||

"/{app_id}/add": {

|

||||

"post": {

|

||||

"tags": ["Apps"],

|

||||

"summary": "Add Datasource To An App",

|

||||

"description": "Add a source to an existing app.",

|

||||

"operationId": "add_datasource_to_an_app__app_id__add_post",

|

||||

"parameters": [

|

||||

{

|

||||

"name": "app_id",

|

||||

"in": "path",

|

||||

"required": true,

|

||||

"schema": { "type": "string", "title": "App Id" }

|

||||

}

|

||||

],

|

||||

"requestBody": {

|

||||

"required": true,

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/SourceApp" }

|

||||

}

|

||||

}

|

||||

},

|

||||

"responses": {

|

||||

"200": {

|

||||

"description": "Successful Response",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/DefaultResponse" }

|

||||

}

|

||||

}

|

||||

},

|

||||

"422": {

|

||||

"description": "Validation Error",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/HTTPValidationError" }

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

},

|

||||

"/{app_id}/query": {

|

||||

"post": {

|

||||

"tags": ["Apps"],

|

||||

"summary": "Query An App",

|

||||

"description": "Query an existing app.",

|

||||

"operationId": "query_an_app__app_id__query_post",

|

||||

"parameters": [

|

||||

{

|

||||

"name": "app_id",

|

||||

"in": "path",

|

||||

"required": true,

|

||||

"schema": { "type": "string", "title": "App Id" }

|

||||

}

|

||||

],

|

||||

"requestBody": {

|

||||

"required": true,

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/QueryApp" }

|

||||

}

|

||||

}

|

||||

},

|

||||

"responses": {

|

||||

"200": {

|

||||

"description": "Successful Response",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/DefaultResponse" }

|

||||

}

|

||||

}

|

||||

},

|

||||

"422": {

|

||||

"description": "Validation Error",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/HTTPValidationError" }

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

},

|

||||

"/{app_id}/chat": {

|

||||

"post": {

|

||||

"tags": ["Apps"],

|

||||

"summary": "Chat With An App",

|

||||

"description": "Query an existing app.\n\napp_id: The ID of the app. Use \"default\" for the default app.\n\nmessage: The message that you want to send to the App.",

|

||||

"operationId": "chat_with_an_app__app_id__chat_post",

|

||||

"parameters": [

|

||||

{

|

||||

"name": "app_id",

|

||||

"in": "path",

|

||||

"required": true,

|

||||

"schema": { "type": "string", "title": "App Id" }

|

||||

}

|

||||

],

|

||||

"requestBody": {

|

||||

"required": true,

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/MessageApp" }

|

||||

}

|

||||

}

|

||||

},

|

||||

"responses": {

|

||||

"200": {

|

||||

"description": "Successful Response",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/DefaultResponse" }

|

||||

}

|

||||

}

|

||||

},

|

||||

"422": {

|

||||

"description": "Validation Error",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/HTTPValidationError" }

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

},

|

||||

"/{app_id}/deploy": {

|

||||

"post": {

|

||||

"tags": ["Apps"],

|

||||

"summary": "Deploy App",

|

||||

"description": "Deploy an existing app.",

|

||||

"operationId": "deploy_app__app_id__deploy_post",

|

||||

"parameters": [

|

||||

{

|

||||

"name": "app_id",

|

||||

"in": "path",

|

||||

"required": true,

|

||||

"schema": { "type": "string", "title": "App Id" }

|

||||

}

|

||||

],

|

||||

"requestBody": {

|

||||

"required": true,

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/DeployAppRequest" }

|

||||

}

|

||||

}

|

||||

},

|

||||

"responses": {

|

||||

"200": {

|

||||

"description": "Successful Response",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/DefaultResponse" }

|

||||

}

|

||||

}

|

||||

},

|

||||

"422": {

|

||||

"description": "Validation Error",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/HTTPValidationError" }

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

},

|

||||

"/{app_id}/delete": {

|

||||

"delete": {

|

||||

"tags": ["Apps"],

|

||||

"summary": "Delete App",

|

||||

"description": "Delete an existing app.",

|

||||

"operationId": "delete_app__app_id__delete_delete",

|

||||

"parameters": [

|

||||

{

|

||||

"name": "app_id",

|

||||

"in": "path",

|

||||

"required": true,

|

||||

"schema": { "type": "string", "title": "App Id" }

|

||||

}

|

||||

],

|

||||

"responses": {

|

||||

"200": {

|

||||

"description": "Successful Response",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/DefaultResponse" }

|

||||

}

|

||||

}

|

||||

},

|

||||

"422": {

|

||||

"description": "Validation Error",

|

||||

"content": {

|

||||

"application/json": {

|

||||

"schema": { "$ref": "#/components/schemas/HTTPValidationError" }

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

},

|

||||

"components": {

|

||||

"schemas": {

|

||||

"Body_create_app_using_default_config_create_post": {

|

||||

"properties": {

|

||||

"config": { "type": "string", "format": "binary", "title": "Config" }

|

||||

},

|

||||

"type": "object",

|

||||

"title": "Body_create_app_using_default_config_create_post"

|

||||

},

|

||||

"DefaultResponse": {

|

||||

"properties": { "response": { "type": "string", "title": "Response" } },

|

||||

"type": "object",

|

||||

"required": ["response"],

|

||||

"title": "DefaultResponse"

|

||||

},

|

||||

"DeployAppRequest": {

|

||||

"properties": {

|

||||

"api_key": {

|

||||

"type": "string",

|

||||

"title": "Api Key",

|

||||

"description": "The Embedchain API key for App deployments.",

|

||||

"default": ""

|

||||

}

|

||||

},

|

||||

"type": "object",

|

||||

"title": "DeployAppRequest",

|

||||

"example":{

|

||||

"api_key":"ec-xxx"

|

||||

}

|

||||

},

|

||||

"HTTPValidationError": {

|

||||

"properties": {

|

||||

"detail": {

|

||||

"items": { "$ref": "#/components/schemas/ValidationError" },

|

||||

"type": "array",

|

||||

"title": "Detail"

|

||||

}

|

||||

},

|

||||

"type": "object",

|

||||

"title": "HTTPValidationError"

|

||||

},

|

||||

"MessageApp": {

|

||||

"properties": {

|

||||

"message": {

|

||||

"type": "string",

|

||||

"title": "Message",

|

||||

"description": "The message that you want to send to the App.",

|

||||

"default": ""

|

||||

}

|

||||

},

|

||||

"type": "object",

|

||||

"title": "MessageApp"

|

||||

},

|

||||

"QueryApp": {

|

||||

"properties": {

|

||||

"query": {

|

||||

"type": "string",

|

||||

"title": "Query",

|

||||

"description": "The query that you want to ask the App.",

|

||||

"default": ""

|

||||

}

|

||||

},

|

||||

"type": "object",

|

||||

"title": "QueryApp",

|

||||

"example":{

|

||||

"query":"Who is Elon Musk?"

|

||||

}

|

||||

},

|

||||

"SourceApp": {

|

||||

"properties": {

|

||||

"source": {

|

||||

"type": "string",

|

||||

"title": "Source",

|

||||

"description": "The source that you want to add to the App.",

|

||||

"default": ""

|

||||

},

|

||||

"data_type": {

|

||||

"anyOf": [{ "type": "string" }, { "type": "null" }],

|

||||

"title": "Data Type",

|

||||

"description": "The type of data to add, remove it if you want Embedchain to detect it automatically.",

|

||||

"default": ""

|

||||

}

|

||||

},

|

||||

"type": "object",

|

||||

"title": "SourceApp",

|

||||

"example":{

|

||||

"source":"https://en.wikipedia.org/wiki/Elon_Musk"

|

||||

}

|

||||

},

|

||||

"ValidationError": {

|

||||

"properties": {

|

||||

"loc": {

|

||||

"items": { "anyOf": [{ "type": "string" }, { "type": "integer" }] },

|

||||

"type": "array",

|

||||

"title": "Location"

|

||||

},

|

||||

"msg": { "type": "string", "title": "Message" },

|

||||

"type": { "type": "string", "title": "Error Type" }

|

||||

},

|

||||

"type": "object",

|

||||

"required": ["loc", "msg", "type"],

|

||||

"title": "ValidationError"

|

||||

}

|

||||

}

|

||||

}

|

||||

}
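To make the schemas in this spec concrete, here is a small client-side sketch for the add, query, and chat endpoints. Request bodies follow `SourceApp`, `QueryApp`, and `MessageApp`, and responses are read according to `DefaultResponse`. The base URL and all helper names are assumptions. The functions only build requests, so nothing is sent unless you pass the result to `urllib.request.urlopen`.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:8000"  # assumption: local container on port 8000


def _post_json(app_id: str, action: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST request for /{app_id}/{action}."""
    path = f"/{urllib.parse.quote(app_id, safe='')}/{action}"
    return urllib.request.Request(
        BASE_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def add_source(app_id: str, source: str, data_type=None) -> urllib.request.Request:
    """SourceApp body; data_type is left out so it can be detected automatically."""
    payload = {"source": source}
    if data_type is not None:
        payload["data_type"] = data_type
    return _post_json(app_id, "add", payload)


def query_app(app_id: str, query: str) -> urllib.request.Request:
    """QueryApp body."""
    return _post_json(app_id, "query", {"query": query})


def chat_with_app(app_id: str, message: str) -> urllib.request.Request:
    """MessageApp body."""
    return _post_json(app_id, "chat", {"message": message})


def parse_default_response(raw: bytes) -> str:
    """Extract the required `response` field of a DefaultResponse payload."""
    return json.loads(raw)["response"]


if __name__ == "__main__":
    req = query_app("default", "Who is Elon Musk?")
    print(req.get_method(), req.full_url, req.data.decode())
```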

|

||||

|

||||

@@ -313,6 +313,15 @@ class Pipeline(EmbedChain):

|

||||

)

|

||||

self.connection.commit()

|

||||

|

||||

def get_data_sources(self):

|

||||

db_data = self.cursor.execute("SELECT * FROM data_sources WHERE pipeline_id = ?", (self.local_id,)).fetchall()

|

||||

|

||||

data_sources = []

|

||||

for data in db_data:

|

||||

data_sources.append({"data_type": data[2], "data_value": data[3], "metadata": data[4]})

|

||||

|

||||

return data_sources

|

||||

|

||||

def deploy(self):

|

||||

if self.client is None:

|

||||

self._init_client()
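The new `get_data_sources` method in this hunk maps raw rows to dictionaries by column position. The standalone sketch below reproduces that mapping against an in-memory SQLite database. The table schema is hypothetical: only the column order (type at index 2, value at index 3, metadata at index 4) is inferred from the indices used in the method.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Hypothetical schema; only the column order matters for the index-based mapping.
cur.execute(
    "CREATE TABLE data_sources ("
    "id INTEGER PRIMARY KEY, pipeline_id TEXT, type TEXT, value TEXT, metadata TEXT)"
)
cur.execute(
    "INSERT INTO data_sources (pipeline_id, type, value, metadata) VALUES (?, ?, ?, ?)",
    ("pipeline-1", "web_page", "https://en.wikipedia.org/wiki/Elon_Musk", "{}"),
)
conn.commit()


def get_data_sources(cursor, pipeline_id):
    """Return the data sources of one pipeline as a list of dicts."""
    rows = cursor.execute(
        "SELECT * FROM data_sources WHERE pipeline_id = ?", (pipeline_id,)
    ).fetchall()
    return [{"data_type": r[2], "data_value": r[3], "metadata": r[4]} for r in rows]


sources = get_data_sources(cur, "pipeline-1")
print(sources)
```

Selecting named columns instead of `SELECT *` would make the mapping independent of column order, at the cost of diverging from the code in the diff.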

|

||||

@@ -348,7 +357,7 @@ class Pipeline(EmbedChain):

|

||||

with open(yaml_path, "r") as file:

|

||||

config_data = yaml.safe_load(file)

|

||||

|

||||

pipeline_config_data = config_data.get("pipeline", {}).get("config", {})

|

||||

pipeline_config_data = config_data.get("app", {}).get("config", {})

|

||||

db_config_data = config_data.get("vectordb", {})

|

||||

embedding_model_config_data = config_data.get("embedding_model", {})

|

||||

llm_config_data = config_data.get("llm", {})
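The hunk above appears to switch the top-level key read from the YAML config from `pipeline` to `app`, which matches the `app:` key used by the example configs in this commit. The sketch below shows the chained `.get` lookup on a plain dictionary standing in for the parsed YAML. `read_app_config` is a hypothetical helper name.

```python
def read_app_config(config_data: dict) -> dict:
    """Return the app-level config section, or an empty dict if it is absent."""
    return config_data.get("app", {}).get("config", {})


# Stand-in for yaml.safe_load() output of a config like examples/rest-api/default.yaml.
config_data = {
    "app": {"config": {"id": "default"}},
    "llm": {"provider": "gpt4all"},
}

print(read_app_config(config_data))

# A config that still uses the old top-level key yields no app settings.
print(read_app_config({"pipeline": {"config": {"id": "legacy"}}}))
```

Because missing keys fall back to empty dictionaries, a config written with the old `pipeline` key is silently ignored rather than rejected.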

|

||||

|

||||

4

examples/rest-api/.dockerignore

Normal file

@@ -0,0 +1,4 @@

|

||||

.env

|

||||

app.db

|

||||

configs/**.yaml

|

||||

db

|

||||

4

examples/rest-api/.gitignore

vendored

Normal file

@@ -0,0 +1,4 @@

|

||||

.env

|

||||

app.db

|

||||

configs/**.yaml

|

||||

db

|

||||

15

examples/rest-api/Dockerfile

Normal file

@@ -0,0 +1,15 @@

|

||||

FROM python:3.11-slim

|

||||

|

||||

WORKDIR /app

|

||||

|

||||

COPY requirements.txt /app/

|

||||

|

||||

RUN pip install --no-cache-dir -r requirements.txt

|

||||

|

||||

COPY . /app

|

||||

|

||||

EXPOSE 8000

|

||||

|

||||

ENV NAME=embedchain

|

||||

|

||||

CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "8000"]

|

||||

21

examples/rest-api/README.md

Normal file

@@ -0,0 +1,21 @@

|

||||

## Single command to rule them all,

|

||||

|

||||

```bash

|

||||

docker run -d --name embedchain -p 8000:8000 embedchain/app:rest-api-latest

|

||||

```

|

||||

|

||||

### To run the app locally,

|

||||

|

||||

```bash

|

||||

# Setting DEVELOPMENT=True for the process enables reload on changes
DEVELOPMENT=True python -m main

|

||||

```

|

||||

|

||||

Using docker (locally),

|

||||

|

||||

```bash

|

||||

docker build -t embedchain/app:rest-api-latest .

|

||||

docker run -d --name embedchain -p 8000:8000 embedchain/app:rest-api-latest

|

||||

docker image push embedchain/app:rest-api-latest

|

||||

```

|

||||

|

||||

0

examples/rest-api/__init__.py

Normal file

5

examples/rest-api/bruno/ec-rest-api/bruno.json

Normal file

@@ -0,0 +1,5 @@

|

||||

{

|

||||

"version": "1",

|

||||

"name": "ec-rest-api",

|

||||

"type": "collection"

|

||||

}

|

||||

18

examples/rest-api/bruno/ec-rest-api/default_add.bru

Normal file

@@ -0,0 +1,18 @@

|

||||

meta {

|

||||

name: default_add

|

||||

type: http

|

||||

seq: 3

|

||||

}

|

||||

|

||||

post {

|

||||

url: http://localhost:8000/add

|

||||

body: json

|

||||

auth: none

|

||||

}

|

||||

|

||||

body:json {

|

||||

{

|

||||

"source": "source_url",

|

||||

"data_type": "data_type"

|

||||

}

|

||||

}

|

||||

17

examples/rest-api/bruno/ec-rest-api/default_chat.bru

Normal file

@@ -0,0 +1,17 @@

meta {
  name: default_chat
  type: http
  seq: 4
}

post {
  url: http://localhost:8000/chat
  body: json
  auth: none
}

body:json {
  {
    "message": "message"
  }
}

17

examples/rest-api/bruno/ec-rest-api/default_query.bru

Normal file

@@ -0,0 +1,17 @@

meta {
  name: default_query
  type: http
  seq: 2
}

post {
  url: http://localhost:8000/query
  body: json
  auth: none
}

body:json {
  {
    "query": "Who is Elon Musk?"
  }
}

11

examples/rest-api/bruno/ec-rest-api/ping.bru

Normal file

@@ -0,0 +1,11 @@

meta {
  name: ping
  type: http
  seq: 1
}

get {
  url: http://localhost:8000/ping
  body: json
  auth: none
}

3

examples/rest-api/configs/README.md

Normal file

@@ -0,0 +1,3 @@

### Config directory

All uploaded YAML config files are stored here.

11

examples/rest-api/database.py

Normal file

@@ -0,0 +1,11 @@

from sqlalchemy import create_engine
from sqlalchemy.ext.declarative import declarative_base
from sqlalchemy.orm import sessionmaker

SQLALCHEMY_DATABASE_URI = "sqlite:///./app.db"

engine = create_engine(SQLALCHEMY_DATABASE_URI, connect_args={"check_same_thread": False})

SessionLocal = sessionmaker(autocommit=False, autoflush=False, bind=engine)

Base = declarative_base()

17

examples/rest-api/default.yaml

Normal file

@@ -0,0 +1,17 @@

app:
  config:
    id: 'default'

llm:
  provider: gpt4all
  config:
    model: 'orca-mini-3b.ggmlv3.q4_0.bin'
    temperature: 0.5
    max_tokens: 1000
    top_p: 1
    stream: false

embedder:
  provider: gpt4all
  config:
    model: 'all-MiniLM-L6-v2'

321

examples/rest-api/main.py

Normal file

@@ -0,0 +1,321 @@

import os
import yaml
from fastapi import FastAPI, UploadFile, Depends, HTTPException
from sqlalchemy.orm import Session
from embedchain import Pipeline as App
from embedchain.client import Client
from models import (
    QueryApp,
    SourceApp,
    DefaultResponse,
    DeployAppRequest,
)
from database import Base, engine, SessionLocal
from services import get_app, save_app, get_apps, remove_app
from utils import generate_error_message_for_api_keys

Base.metadata.create_all(bind=engine)


def get_db():
    db = SessionLocal()
    try:
        yield db
    finally:
        db.close()


app = FastAPI(
    title="Embedchain REST API",
    description="This is the REST API for Embedchain.",
    version="0.0.1",
    license_info={
        "name": "Apache 2.0",
        "url": "https://github.com/embedchain/embedchain/blob/main/LICENSE",
    },
)


@app.get("/ping", tags=["Utility"])
def check_status():
    """
    Endpoint to check the status of the API.
    """
    return {"ping": "pong"}


@app.get("/apps", tags=["Apps"])
async def get_all_apps(db: Session = Depends(get_db)):
    """
    Get all apps.
    """
    apps = get_apps(db)
    return {"results": apps}


@app.post("/create", tags=["Apps"], response_model=DefaultResponse)
async def create_app_using_default_config(app_id: str, config: UploadFile = None, db: Session = Depends(get_db)):
    """
    Create a new app using App ID.
    If you don't provide a config file, Embedchain will use the default config file\n
    which uses the open-source GPT4All model.\n
    app_id: The ID of the app.\n
    config: The YAML config file to create an App.\n
    """
    try:
        if app_id is None:
            raise HTTPException(detail="App ID not provided.", status_code=400)

        if get_app(db, app_id) is not None:
            raise HTTPException(detail=f"App with id '{app_id}' already exists.", status_code=400)

        yaml_path = "default.yaml"
        if config is not None:
            contents = await config.read()
            try:
                yaml.safe_load(contents)
                # TODO: validate the config yaml file here
                yaml_path = f"configs/{app_id}.yaml"
                with open(yaml_path, "w") as file:
                    file.write(str(contents, "utf-8"))
            except yaml.YAMLError as exc:
                raise HTTPException(detail=f"Error parsing YAML: {exc}", status_code=400)

        save_app(db, app_id, yaml_path)

        return DefaultResponse(response=f"App created successfully. App ID: {app_id}")
    except Exception as e:
        raise HTTPException(detail=f"Error creating app: {e}", status_code=400)


@app.get(
    "/{app_id}/data",
    tags=["Apps"],
)
async def get_datasources_associated_with_app_id(app_id: str, db: Session = Depends(get_db)):
    """
    Get all datasources for an app.\n
    app_id: The ID of the app. Use "default" for the default app.\n
    """
    try:
        if app_id is None:
            raise HTTPException(
                detail="App ID not provided. If you want to use the default app, use 'default' as the app_id.",
                status_code=400,
            )

        db_app = get_app(db, app_id)

        if db_app is None:
            raise HTTPException(detail=f"App with id {app_id} does not exist, please create it first.", status_code=400)

        app = App.from_config(yaml_path=db_app.config)

        response = app.get_data_sources()
        return {"results": response}
    except ValueError as ve:
        if "OPENAI_API_KEY" in str(ve) or "OPENAI_ORGANIZATION" in str(ve):
            raise HTTPException(
                detail=generate_error_message_for_api_keys(ve),
                status_code=400,
            )
    except Exception as e:
        raise HTTPException(detail=f"Error occurred: {e}", status_code=400)


@app.post(
    "/{app_id}/add",
    tags=["Apps"],
    response_model=DefaultResponse,
)
async def add_datasource_to_an_app(body: SourceApp, app_id: str, db: Session = Depends(get_db)):
    """
    Add a source to an existing app.\n
    app_id: The ID of the app. Use "default" for the default app.\n
    source: The source to add.\n
    data_type: The data type of the source. Remove it if you want Embedchain to detect it automatically.\n
    """
    try:
        if app_id is None:
            raise HTTPException(
                detail="App ID not provided. If you want to use the default app, use 'default' as the app_id.",
                status_code=400,
            )

        db_app = get_app(db, app_id)

        if db_app is None:
            raise HTTPException(detail=f"App with id {app_id} does not exist, please create it first.", status_code=400)

        app = App.from_config(yaml_path=db_app.config)

        response = app.add(source=body.source, data_type=body.data_type)
        return DefaultResponse(response=response)
    except ValueError as ve:
        if "OPENAI_API_KEY" in str(ve) or "OPENAI_ORGANIZATION" in str(ve):
            raise HTTPException(
                detail=generate_error_message_for_api_keys(ve),
                status_code=400,
            )
    except Exception as e:
        raise HTTPException(detail=f"Error occurred: {e}", status_code=400)


@app.post(
    "/{app_id}/query",
    tags=["Apps"],
    response_model=DefaultResponse,
)
async def query_an_app(body: QueryApp, app_id: str, db: Session = Depends(get_db)):
    """
    Query an existing app.\n
    app_id: The ID of the app. Use "default" for the default app.\n
    query: The query that you want to ask the App.\n
    """
    try:
        if app_id is None:
            raise HTTPException(
                detail="App ID not provided. If you want to use the default app, use 'default' as the app_id.",
                status_code=400,
            )

        db_app = get_app(db, app_id)

        if db_app is None:
            raise HTTPException(detail=f"App with id {app_id} does not exist, please create it first.", status_code=400)

        app = App.from_config(yaml_path=db_app.config)

        response = app.query(body.query)
        return DefaultResponse(response=response)
    except ValueError as ve:
        if "OPENAI_API_KEY" in str(ve) or "OPENAI_ORGANIZATION" in str(ve):
            raise HTTPException(
                detail=generate_error_message_for_api_keys(ve),
                status_code=400,
            )
    except Exception as e:
        raise HTTPException(detail=f"Error occurred: {e}", status_code=400)


# FIXME: The chat implementation of Embedchain needs to be modified to work with the REST API.
# @app.post(
#     "/{app_id}/chat",
#     tags=["Apps"],
#     response_model=DefaultResponse,
# )
# async def chat_with_an_app(body: MessageApp, app_id: str, db: Session = Depends(get_db)):
#     """
#     Chat with an existing app.\n
#     app_id: The ID of the app. Use "default" for the default app.\n
#     message: The message that you want to send to the App.\n
#     """
#     try:
#         if app_id is None:
#             raise HTTPException(
#                 detail="App ID not provided. If you want to use the default app, use 'default' as the app_id.",
#                 status_code=400,
#             )

#         db_app = get_app(db, app_id)

#         if db_app is None:
#             raise HTTPException(
#                 detail=f"App with id {app_id} does not exist, please create it first.",
#                 status_code=400
#             )

#         app = App.from_config(yaml_path=db_app.config)

#         response = app.chat(body.message)
#         return DefaultResponse(response=response)
#     except ValueError as ve:
#         if "OPENAI_API_KEY" in str(ve) or "OPENAI_ORGANIZATION" in str(ve):
#             raise HTTPException(
#                 detail=generate_error_message_for_api_keys(ve),
#                 status_code=400,
#             )
#     except Exception as e:
#         raise HTTPException(detail=f"Error occurred: {e}", status_code=400)


@app.post(
    "/{app_id}/deploy",
    tags=["Apps"],
    response_model=DefaultResponse,
)
async def deploy_app(body: DeployAppRequest, app_id: str, db: Session = Depends(get_db)):
    """
    Deploy an existing app.\n
    app_id: The ID of the app. Use "default" for the default app.\n
    api_key: The API key to use for deployment. If not provided,
    Embedchain will use the API key previously used (if any).\n
    """
    try:
        if app_id is None:
            raise HTTPException(
                detail="App ID not provided. If you want to use the default app, use 'default' as the app_id.",
                status_code=400,
            )

        db_app = get_app(db, app_id)

        if db_app is None:
            raise HTTPException(detail=f"App with id {app_id} does not exist, please create it first.", status_code=400)

        app = App.from_config(yaml_path=db_app.config)

        api_key = body.api_key
        # this will save the api key in the embedchain.db
        Client(api_key=api_key)

        app.deploy()
        return DefaultResponse(response="App deployed successfully.")
    except ValueError as ve:
        if "OPENAI_API_KEY" in str(ve) or "OPENAI_ORGANIZATION" in str(ve):
            raise HTTPException(
                detail=generate_error_message_for_api_keys(ve),
                status_code=400,
            )
    except Exception as e:
        raise HTTPException(detail=f"Error occurred: {e}", status_code=400)


@app.delete(
    "/{app_id}/delete",
    tags=["Apps"],
    response_model=DefaultResponse,
)
async def delete_app(app_id: str, db: Session = Depends(get_db)):
    """
    Delete an existing app.\n
    app_id: The ID of the app to be deleted.
    """
    try:
        if app_id is None:
            raise HTTPException(
                detail="App ID not provided. If you want to use the default app, use 'default' as the app_id.",
                status_code=400,
            )

        db_app = get_app(db, app_id)

        if db_app is None:
            raise HTTPException(detail=f"App with id {app_id} does not exist, please create it first.", status_code=400)

        app = App.from_config(yaml_path=db_app.config)

        # reset app.db
        app.db.reset()

        remove_app(db, app_id)
        return DefaultResponse(response=f"App with id {app_id} deleted successfully.")
    except Exception as e:
        raise HTTPException(detail=f"Error occurred: {e}", status_code=400)


if __name__ == "__main__":
    import uvicorn

    # bool("False") is True, so compare the string explicitly
    is_dev = os.getenv("DEVELOPMENT", "False").lower() == "true"
    uvicorn.run("main:app", host="0.0.0.0", port=8000, reload=is_dev)
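The `__main__` block reads a `DEVELOPMENT` environment variable to decide whether uvicorn should reload on changes. Environment variables are always strings, and any non-empty string, including `"False"`, is truthy in Python, so such flags need explicit parsing. A minimal sketch, assuming a hypothetical helper `env_flag` (not part of this commit) and an arbitrary set of accepted spellings:

```python
import os

TRUTHY = {"1", "true", "yes", "on"}  # accepted spellings are an assumption


def env_flag(name: str, default: bool = False) -> bool:
    """Return True only when the variable is set to a recognised truthy string."""
    raw = os.environ.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in TRUTHY
```

With this helper, `DEVELOPMENT=False` and an unset variable both disable reload, while `DEVELOPMENT=True` enables it.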

45

examples/rest-api/models.py

Normal file

@@ -0,0 +1,45 @@

from typing import Optional
from pydantic import BaseModel, Field
from sqlalchemy import Column, String, Integer
from database import Base


class QueryApp(BaseModel):
    query: str = Field("", description="The query that you want to ask the App.")

    model_config = {
        "json_schema_extra": {
            "example": {
                "query": "Who is Elon Musk?",
            }
        }
    }


class SourceApp(BaseModel):
    source: str = Field("", description="The source that you want to add to the App.")
    data_type: Optional[str] = Field("", description="The type of data to add, remove it for autosense.")

    model_config = {"json_schema_extra": {"example": {"source": "https://en.wikipedia.org/wiki/Elon_Musk"}}}


class DeployAppRequest(BaseModel):
    api_key: str = Field("", description="The Embedchain API key for App deployments.")

    model_config = {"json_schema_extra": {"example": {"api_key": "ec-xxx"}}}


class MessageApp(BaseModel):
    message: str = Field("", description="The message that you want to send to the App.")


class DefaultResponse(BaseModel):
    response: str


class AppModel(Base):
    __tablename__ = "apps"

    id = Column(Integer, primary_key=True, index=True)
    app_id = Column(String, unique=True, index=True)
    config = Column(String, unique=True, index=True)
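`AppModel` declares both `app_id` and `config` as unique columns. The sketch below uses the standard-library `sqlite3` module instead of SQLAlchemy (a deliberate substitution to keep it dependency-free) with a hand-written table that is only assumed to mirror the `apps` schema. It shows what those constraints imply: a second row that reuses either value is rejected. `try_insert` and the sample IDs and paths are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hand-written approximation of the table SQLAlchemy would create for AppModel.
conn.execute(
    """
    CREATE TABLE apps (
        id INTEGER PRIMARY KEY,
        app_id TEXT UNIQUE,
        config TEXT UNIQUE
    )
    """
)


def try_insert(app_id: str, config: str) -> bool:
    """Insert a row; return False if a UNIQUE constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO apps (app_id, config) VALUES (?, ?)", (app_id, config))
        return True
    except sqlite3.IntegrityError:
        return False


first = try_insert("app-one", "configs/app-one.yaml")
duplicate_id = try_insert("app-one", "configs/other.yaml")
shared_config = try_insert("app-two", "configs/app-one.yaml")
```

Under these assumptions, two apps cannot point at the same config path. That is worth keeping in mind because `/create` stores `default.yaml` as the config whenever no file is uploaded.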

5

examples/rest-api/requirements.txt

Normal file

@@ -0,0 +1,5 @@

fastapi==0.104.0
uvicorn==0.23.2
embedchain==0.0.86
embedchain[dataloaders]==0.0.86
sqlalchemy==2.0.22

33

examples/rest-api/sample-config.yaml

Normal file

@@ -0,0 +1,33 @@

app:
  config:
    id: 'default-app'

llm:
  provider: openai
  config:
    model: 'gpt-3.5-turbo'
    temperature: 0.5
    max_tokens: 1000
    top_p: 1
    stream: false
    template: |
      Use the following pieces of context to answer the query at the end.
      If you don't know the answer, just say that you don't know, don't try to make up an answer.

      $context

      Query: $query

      Helpful Answer:

vectordb:
  provider: chroma
  config:
    collection_name: 'rest-api-app'
    dir: db
    allow_reset: true

embedder:
  provider: openai
  config:
    model: 'text-embedding-ada-002'

26

examples/rest-api/services.py

Normal file

@@ -0,0 +1,26 @@

from sqlalchemy.orm import Session

from models import AppModel


def get_app(db: Session, app_id: str):
    return db.query(AppModel).filter(AppModel.app_id == app_id).first()


def get_apps(db: Session, skip: int = 0, limit: int = 100):
    return db.query(AppModel).offset(skip).limit(limit).all()


def save_app(db: Session, app_id: str, config: str):
    db_app = AppModel(app_id=app_id, config=config)
    db.add(db_app)
    db.commit()
    db.refresh(db_app)
    return db_app


def remove_app(db: Session, app_id: str):
    db_app = db.query(AppModel).filter(AppModel.app_id == app_id).first()
    db.delete(db_app)
    db.commit()
    return db_app

21

examples/rest-api/utils.py

Normal file

@@ -0,0 +1,21 @@

def generate_error_message_for_api_keys(error: ValueError) -> str:
    env_mapping = {
        "OPENAI_API_KEY": "OPENAI_API_KEY",
        "OPENAI_API_TYPE": "OPENAI_API_TYPE",
        "OPENAI_API_BASE": "OPENAI_API_BASE",
        "OPENAI_API_VERSION": "OPENAI_API_VERSION",
        "COHERE_API_KEY": "COHERE_API_KEY",
        "ANTHROPIC_API_KEY": "ANTHROPIC_API_KEY",
        "JINACHAT_API_KEY": "JINACHAT_API_KEY",
        "HUGGINGFACE_ACCESS_TOKEN": "HUGGINGFACE_ACCESS_TOKEN",
        "REPLICATE_API_TOKEN": "REPLICATE_API_TOKEN",
    }

    missing_keys = [env_mapping[key] for key in env_mapping if key in str(error)]
    if missing_keys:
        missing_keys_str = ", ".join(missing_keys)
        return f"""Please set the {missing_keys_str} environment variable(s) when running the Docker container.
Example: `docker run -e {missing_keys[0]}=xxx embedchain/app:rest-api-latest`
"""
    else:
        return "Unknown error occurred."
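`generate_error_message_for_api_keys` works by substring matching: any known variable name that appears in the exception text is reported as missing. A condensed sketch of the same idea, using a hypothetical `find_missing_keys` helper, a shortened key list, and an invented error message (not a real library error):

```python
KNOWN_KEYS = ["OPENAI_API_KEY", "COHERE_API_KEY", "ANTHROPIC_API_KEY"]  # shortened list


def find_missing_keys(error: Exception) -> list:
    """Return the known variable names mentioned in the error text."""
    text = str(error)
    return [key for key in KNOWN_KEYS if key in text]


# The message below is invented for illustration.
example = ValueError("Did not find OPENAI_API_KEY, please set it as an environment variable.")
missing = find_missing_keys(example)
if missing:
    hint = f"docker run -e {missing[0]}=xxx embedchain/app:rest-api-latest"
else:
    hint = "Unknown error occurred."
```

Because the match is purely textual, an error that names no known variable falls through to the generic message.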
