[new] streamlit deployment (#1034)


docs/deployment/streamlit_io.mdx


@@ -0,0 +1,62 @@

---
title: 'Streamlit.io'
description: 'Deploy your RAG application to the streamlit.io platform'
---

Embedchain offers a Streamlit template to facilitate the development of RAG chatbot applications in just three easy steps.

Follow the instructions given below to deploy your first application quickly:

## Step-1: Create RAG app

We provide a command line utility called `ec` in embedchain that creates an app from the `streamlit.io` platform template and helps you deploy it. Follow the instructions below to create a streamlit.io app using the provided template:

```bash Install embedchain
pip install embedchain
```

```bash Create application
mkdir my-rag-app
cd my-rag-app
ec create --template=streamlit.io
```

This will generate a directory structure like this:

```bash
├── .streamlit
│   └── secrets.toml
├── app.py
├── embedchain.json
└── requirements.txt
```

Feel free to edit the files as required.

- `app.py`: Contains the Streamlit app code
- `.streamlit/secrets.toml`: Contains secrets for your application
- `embedchain.json`: Contains embedchain-specific configuration for deployment (you don't need to configure this)
- `requirements.txt`: Contains Python dependencies for your application

Add your `OPENAI_API_KEY` to the `.streamlit/secrets.toml` file to run and deploy the app.

## Step-2: Test app locally

Run the app locally with:

```bash Run locally
pip install -r requirements.txt
ec dev
```
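
For the `streamlit.io` template, `ec dev` shells out to `streamlit run app.py`, so running that command directly is equivalent. The sketch below mirrors that behavior in plain Python. `run_streamlit_app` and its injectable `runner` parameter are hypothetical names, added so the command can be inspected without launching a server.

```python
import subprocess
from typing import Callable


def run_streamlit_app(
    app_file: str = "app.py",
    runner: Callable[..., object] = subprocess.run,
) -> list[str]:
    """Hypothetical sketch: launch `streamlit run <app_file>` the way `ec dev`
    does for the streamlit.io template, and return the command that was used."""
    cmd = ["streamlit", "run", app_file]
    try:
        # check=True raises CalledProcessError if streamlit exits with an error
        runner(cmd, check=True)
    except subprocess.CalledProcessError as e:
        print(f"An error occurred: {e}")
    except KeyboardInterrupt:
        # Ctrl+C stops the local server; treat it as a normal shutdown
        print("Streamlit server stopped")
    return cmd
```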

|

||||

|

||||

## Step-3: Deploy to streamlit.io

|

||||

|

||||

|

||||

|

||||

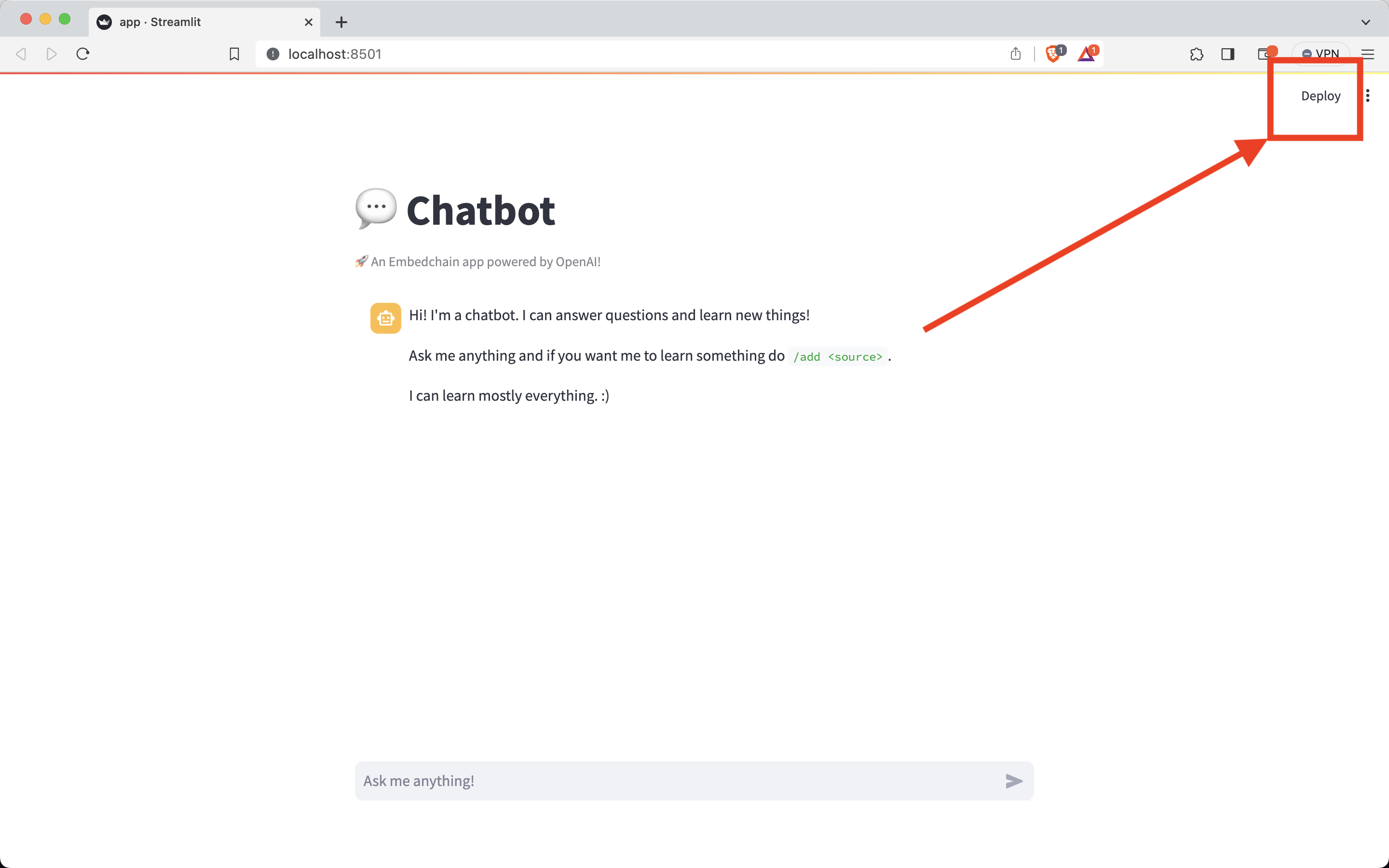

Use the deploy button from the streamlit website to deploy your app.

|

||||

|

||||

You can refer this [guide](https://docs.streamlit.io/streamlit-community-cloud/deploy-your-app) if you run into any problems.

## Seeking help?

If you run into issues with deployment, please feel free to reach out to us via any of the following methods:

<Snippet file="get-help.mdx" />

@@ -9,6 +9,7 @@ After successfully setting up and testing your RAG app locally, the next step is

```diff
   <Card title="Fly.io" href="/deployment/fly_io"></Card>
   <Card title="Modal.com" href="/deployment/modal_com"></Card>
   <Card title="Render.com" href="/deployment/render_com"></Card>
+  <Card title="Streamlit.io" href="/deployment/streamlit_io"></Card>
   <Card title="Embedchain Platform" href="#option-1-deploy-on-embedchain-platform"></Card>
   <Card title="Self-hosting" href="#option-2-self-hosting"></Card>
 </CardGroup>
```

@@ -89,7 +89,8 @@

```diff
         "get-started/deployment",
         "deployment/fly_io",
         "deployment/modal_com",
-        "deployment/render_com"
+        "deployment/render_com",
+        "deployment/streamlit_io"
       ]
     },
     {
```

@@ -98,6 +98,11 @@ def setup_render_com_app():

```diff
     )


+def setup_streamlit_io_app():
+    # nothing needs to be done here
+    console.print("Great! Now you can install the dependencies by doing `pip install -r requirements.txt`")
+
+
 @cli.command()
 @click.option("--template", default="fly.io", help="The template to use.")
 @click.argument("extra_args", nargs=-1, type=click.UNPROCESSED)
```

@@ -113,6 +118,8 @@ def create(template, extra_args):

```diff
         setup_modal_com_app(extra_args)
     elif template == "render.com":
         setup_render_com_app()
+    elif template == "streamlit.io":
+        setup_streamlit_io_app()
     else:
         raise ValueError(f"Unknown template '{template}'.")
```
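
Each new template adds another `elif` branch to `create`, and likewise to `dev` and `deploy`. A dictionary that maps template names to setup callables is a common alternative. The sketch below is illustrative only. `TEMPLATE_SETUP` and the stub functions are hypothetical stand-ins rather than Embedchain code, and templates whose setup takes `extra_args` (fly.io, modal.com) are left out for brevity.

```python
from typing import Callable


def setup_render_com_app() -> str:
    return "render.com setup"  # stand-in for the real setup function


def setup_streamlit_io_app() -> str:
    return "streamlit.io setup"  # stand-in: the real one only prints a hint


# Hypothetical lookup table from template name to setup function
TEMPLATE_SETUP: dict[str, Callable[[], str]] = {
    "render.com": setup_render_com_app,
    "streamlit.io": setup_streamlit_io_app,
}


def create(template: str) -> str:
    try:
        setup = TEMPLATE_SETUP[template]
    except KeyError:
        # Same error message as the if/elif version
        raise ValueError(f"Unknown template '{template}'.") from None
    return setup()
```

A table keeps the supported templates in one place, so the same keys could also drive option validation, for example with `click.Choice(list(TEMPLATE_SETUP))`.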

@@ -152,6 +159,16 @@ def run_dev_modal_com():

```diff
         console.print("\n🛑 [bold yellow]FastAPI server stopped[/bold yellow]")


+def run_dev_streamlit_io():
+    streamlit_run_cmd = ["streamlit", "run", "app.py"]
+    try:
+        console.print(f"🚀 [bold cyan]Running Streamlit app with command: {' '.join(streamlit_run_cmd)}[/bold cyan]")
+        subprocess.run(streamlit_run_cmd, check=True)
+    except subprocess.CalledProcessError as e:
+        console.print(f"❌ [bold red]An error occurred: {e}[/bold red]")
+    except KeyboardInterrupt:
+        console.print("\n🛑 [bold yellow]Streamlit server stopped[/bold yellow]")
+
 def run_dev_render_com(debug, host, port):
     uvicorn_command = ["uvicorn", "app:app"]
```

@@ -186,6 +203,8 @@ def dev(debug, host, port):

```diff
         run_dev_modal_com()
     elif template == "render.com":
         run_dev_render_com(debug, host, port)
+    elif template == "streamlit.io":
+        run_dev_streamlit_io()
     else:
         raise ValueError(f"Unknown template '{template}'.")
```

@@ -260,6 +279,26 @@ def deploy_modal():

```diff
         )


+def deploy_streamlit():
+    streamlit_deploy_cmd = ["streamlit", "run", "app.py"]
+    try:
+        console.print(f"🚀 [bold cyan]Running: {' '.join(streamlit_deploy_cmd)}[/bold cyan]")
+        console.print(
+            """\n\n✅ [bold yellow]To deploy a streamlit app, you can deploy it directly from the UI.\n
+        Click on the 'Deploy' button on the top right corner of the app.\n
+        For more information, please refer to https://docs.embedchain.ai/deployment/streamlit_io
+        [/bold yellow]
+                \n\n"""
+        )
+        subprocess.run(streamlit_deploy_cmd, check=True)
+    except subprocess.CalledProcessError as e:
+        console.print(f"❌ [bold red]An error occurred: {e}[/bold red]")
+    except FileNotFoundError:
+        console.print(
+            """❌ [bold red]'streamlit' command not found.\n
+        Please ensure Streamlit CLI is installed and in your PATH.[/bold red]"""
+        )
+
 def deploy_render():
     render_deploy_cmd = ["render", "blueprint", "launch"]
```

@@ -290,5 +329,7 @@ def deploy():

```diff
         deploy_modal()
     elif template == "render.com":
         deploy_render()
+    elif template == "streamlit.io":
+        deploy_streamlit()
     else:
         console.print("❌ [bold red]No recognized deployment platform found.[/bold red]")
```

@@ -0,0 +1 @@

```toml
OPENAI_API_KEY="sk-xxx"
```


embedchain/deployment/streamlit.io/app.py


@@ -0,0 +1,59 @@

```python
import streamlit as st

from embedchain import Pipeline as App


@st.cache_resource
def embedchain_bot():
    return App()


st.title("💬 Chatbot")
st.caption("🚀 An Embedchain app powered by OpenAI!")
if "messages" not in st.session_state:
    st.session_state.messages = [
        {
            "role": "assistant",
            "content": """
        Hi! I'm a chatbot. I can answer questions and learn new things!\n
        Ask me anything and if you want me to learn something do `/add <source>`.\n
        I can learn mostly everything. :)
        """,
        }
    ]

for message in st.session_state.messages:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])

if prompt := st.chat_input("Ask me anything!"):
    app = embedchain_bot()

    if prompt.startswith("/add"):
        with st.chat_message("user"):
            st.markdown(prompt)
            st.session_state.messages.append({"role": "user", "content": prompt})
        prompt = prompt.replace("/add", "").strip()
        with st.chat_message("assistant"):
            message_placeholder = st.empty()
            message_placeholder.markdown("Adding to knowledge base...")
            app.add(prompt)
            message_placeholder.markdown(f"Added {prompt} to knowledge base!")
            st.session_state.messages.append({"role": "assistant", "content": f"Added {prompt} to knowledge base!"})
            st.stop()

    with st.chat_message("user"):
        st.markdown(prompt)
        st.session_state.messages.append({"role": "user", "content": prompt})

    with st.chat_message("assistant"):
        msg_placeholder = st.empty()
        msg_placeholder.markdown("Thinking...")
        full_response = ""

        for response in app.chat(prompt):
            msg_placeholder.empty()
            full_response += response

        msg_placeholder.markdown(full_response)
        st.session_state.messages.append({"role": "assistant", "content": full_response})
```
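
The chat handler above does two things. It treats a prompt starting with `/add` as a request to add a source, and it builds the reply by concatenating whatever `app.chat(prompt)` yields. Whether `app.chat` streams chunks or returns a plain string likely depends on the LLM configuration; that is an assumption here. If it returns a string, iterating over it walks the characters in order, so the full answer is still produced. The sketch below factors both steps into small pure functions that can be tested without Streamlit. `parse_add_command` and `collect_response` are hypothetical names. The parser splits on whitespace, so a prompt such as `/address book` is not mistaken for the command; this differs slightly from the template's `startswith` check.

```python
from typing import Iterable, Optional


def parse_add_command(prompt: str) -> Optional[str]:
    """Hypothetical helper: return the source passed to `/add`, or None when the
    prompt is an ordinary question. Splitting on whitespace means a prompt like
    '/address book' is not treated as the command."""
    parts = prompt.split(maxsplit=1)
    if not parts or parts[0] != "/add":
        return None
    return parts[1].strip() if len(parts) > 1 else ""


def collect_response(chunks: Iterable[str]) -> str:
    """Join streamed chunks into one reply. A plain string also works, because
    iterating over it yields its characters in order."""
    return "".join(chunks)
```

In the app, `source = parse_add_command(prompt)` followed by `if source is not None: app.add(source)` would replace the `startswith`/`replace` pair. `collect_response(app.chat(prompt))` returns the same text the loop accumulates.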


embedchain/deployment/streamlit.io/requirements.txt


@@ -0,0 +1,2 @@

```text
streamlit==1.29.0
embedchain
```
