---
title: "🌍 Getting Started"
---


## Quickstart


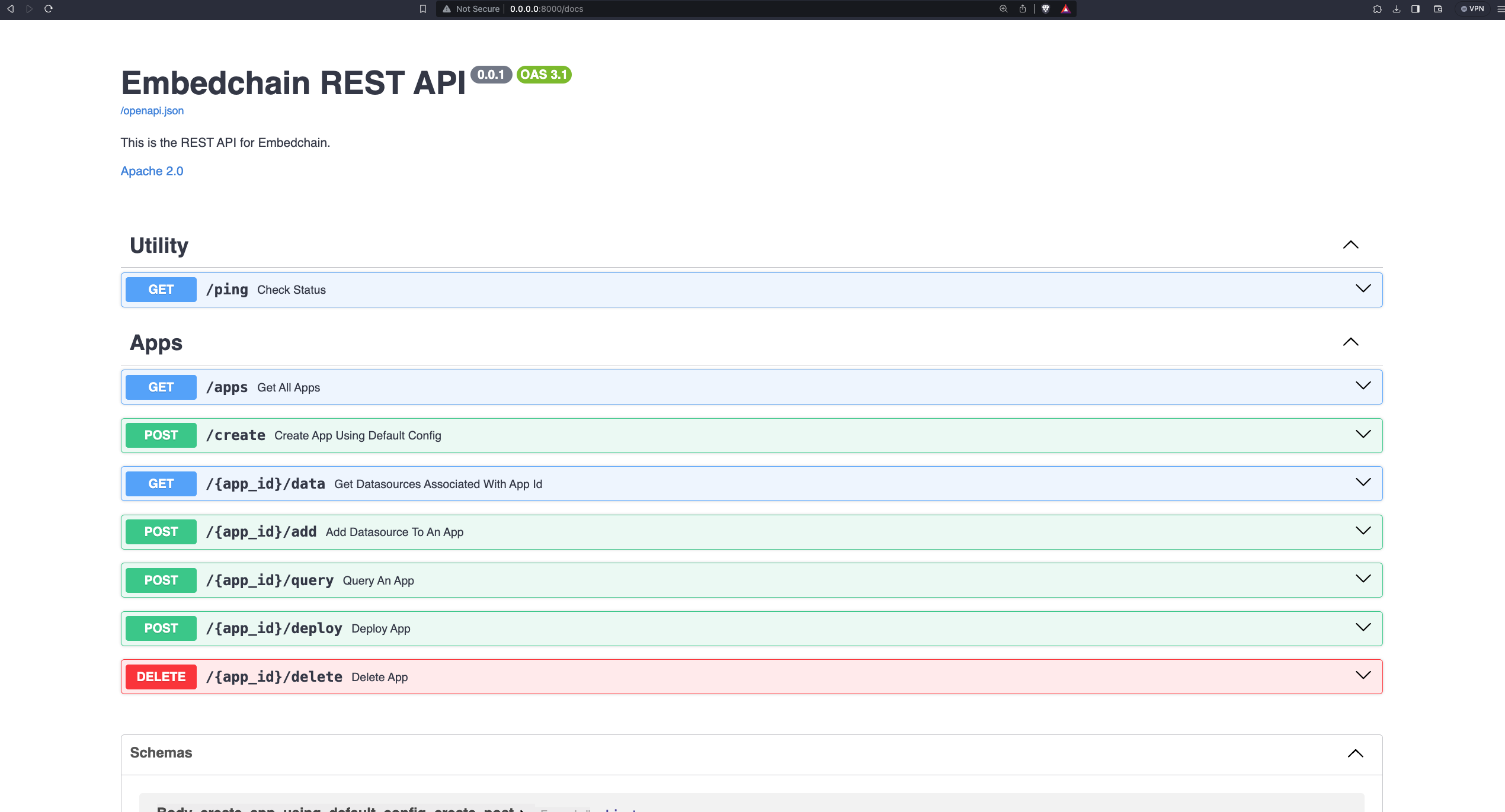

To run Embedchain as a REST API server, use:

```bash
docker run -d --name embedchain -p 8080:8080 embedchain/rest-api:latest
```

Open your browser and navigate to http://localhost:8080/docs to interact with the API. It serves a full-featured Swagger UI playground with details on every API endpoint.
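
Because `docker run -d` returns as soon as the container starts, the docs page may not respond for a few seconds. The helper below is a minimal bash sketch (the function name `wait_for_api` is our own, and it assumes `curl` is installed and the `-p 8080:8080` mapping used above) that polls a URL until it answers or a timeout expires:

```bash
# Poll a URL until it answers with a success status, or give up.
# Arguments: $1 = URL to poll (default: http://localhost:8080/docs)
#            $2 = timeout in seconds (default: 60)
# Returns 0 once the URL responds, 1 if the timeout elapses first.
wait_for_api() {
  local url="${1:-http://localhost:8080/docs}"
  local timeout="${2:-60}"
  # SECONDS is a bash builtin counting seconds since the shell started.
  local deadline=$((SECONDS + timeout))

  while (( SECONDS < deadline )); do
    # --fail: non-zero exit on HTTP errors; --max-time bounds each attempt.
    if curl --fail --silent --output /dev/null --max-time 5 "$url"; then
      echo "API is up at $url"
      return 0
    fi
    sleep 1
  done

  echo "Timed out after ${timeout}s waiting for $url" >&2
  return 1
}
```

Paste it into your shell (or `source` it from a script) and run `wait_for_api` right after `docker run`; it returns 0 once `/docs` responds. Polling the docs page avoids relying on a dedicated health-check endpoint, which this guide does not document.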

## Creating your first App

Each app requires an `app_id`, a unique identifier for your app.

By default, the app uses the open-source **gpt4all** model. You can also specify your own configuration by uploading a config YAML file.

For example, create a `config.yaml` file and adjust it to your requirements:

```yaml
app:
  config:
    id: "default-app"

llm:
  provider: openai
  config:
    model: "gpt-3.5-turbo"
    temperature: 0.5
    max_tokens: 1000
    top_p: 1
    stream: false
    template: |
      Use the following pieces of context to answer the query at the end.
      If you don't know the answer, just say that you don't know, don't try to make up an answer.

      $context

      Query: $query

      Helpful Answer:

vectordb:
  provider: chroma
  config:
    collection_name: "rest-api-app"
    dir: db
    allow_reset: true

embedder:
  provider: openai
  config:
    model: "text-embedding-ada-002"
```

To learn more about custom configurations, see [Custom configurations](https://docs.embedchain.ai/advanced/configuration). For more example config YAMLs, visit [embedchain/configs](https://github.com/embedchain/embedchain/tree/main/configs).

You can then upload this config file in the request body when creating your app.
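
As a sketch of what that upload might look like from the command line, the function below (its name is hypothetical) sends `config.yaml` as a multipart form upload with `curl`. The `/create` path, the `app_id` query parameter, and the `config` form field are assumptions about the server, so confirm them in the Swagger UI at `/docs` first.

```bash
# Create an app by uploading a config YAML to the REST API.
# ASSUMPTION: the server exposes POST /create with an `app_id` query
# parameter and a multipart file field named `config`; verify both in /docs.
# Arguments: $1 = app_id (inserted into the URL as-is, so keep it URL-safe),
#            $2 = path to the config YAML,
#            $3 = base URL (default: http://localhost:8080)
create_app() {
  local app_id="${1:-}"
  local config_path="${2:-}"
  local base_url="${3:-http://localhost:8080}"

  if [[ -z "$app_id" || ! -f "$config_path" ]]; then
    echo "usage: create_app APP_ID CONFIG_YAML [BASE_URL]" >&2
    return 2
  fi

  # -F sends a multipart/form-data POST; the "@" prefix uploads the file.
  curl --fail --silent --show-error \
    -F "config=@${config_path}" \
    "${base_url}/create?app_id=${app_id}"
}
```

For example: `create_app my-first-app config.yaml`. If your config uses a hosted provider such as OpenAI, the container also needs the matching API key.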

**Note:** Custom models may require an **API key**. Refer to the table below to find the API key your provider needs.

| Environment variable       | Providers                         |
| -------------------------- | --------------------------------- |
| `OPENAI_API_KEY`           | OpenAI, Azure OpenAI, Jina, etc.  |
| `OPENAI_API_TYPE`          | Azure OpenAI                      |
| `OPENAI_API_BASE`          | Azure OpenAI                      |
| `OPENAI_API_VERSION`       | Azure OpenAI                      |
| `COHERE_API_KEY`           | Cohere                            |
| `ANTHROPIC_API_KEY`        | Anthropic                         |
| `JINACHAT_API_KEY`         | Jina                              |
| `HUGGINGFACE_ACCESS_TOKEN` | Hugging Face                      |
| `REPLICATE_API_TOKEN`      | Llama 2 (via Replicate)           |

To provide these keys, pass each one to `docker run` with the `-e` flag. For example:

```bash
docker run -d --name embedchain -p 8080:8080 -e OPENAI_API_KEY=YOUR_API_KEY embedchain/rest-api:latest
```
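
When your config needs several keys, Docker's `--env-file` flag can read them from a file instead of repeating `-e`. A minimal sketch, assuming you need the OpenAI and Cohere keys (keep whichever keys from the table your providers require; the file name `embedchain.env` is arbitrary):

```bash
env_file="embedchain.env"

# umask 077 makes a newly created file readable by its owner only.
# Values below are placeholders: replace them with your real keys.
(umask 077; cat > "$env_file") <<'EOF'
# One KEY=VALUE per line; Docker ignores lines starting with '#'.
OPENAI_API_KEY=YOUR_API_KEY
COHERE_API_KEY=YOUR_COHERE_API_KEY
EOF

# chmod also tightens permissions if the file already existed.
chmod 600 "$env_file"
```

Then start the container with `docker run -d --name embedchain -p 8080:8080 --env-file embedchain.env embedchain/rest-api:latest`. Docker reads each value literally and does not strip quotes, so write values without them. Keeping keys in a file also keeps them out of your shell history.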

Cool! Uploading your config this way creates a new Embedchain app with the given `app_id`.

## Deploying your App to Embedchain Platform

This feature lets you create a public API endpoint for your app so that you can query it from anywhere. It also creates a _pipeline_ for your app that periodically syncs your data so your queries return the best results.

To use this feature, visit [app.embedchain.ai](https://app.embedchain.ai) and create an account, then generate a new [API key](https://app.embedchain.ai/settings/keys/).

Using this API key, you can deploy your app to the platform.
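
Deployment can also be scripted against the local server. The sketch below is hypothetical: the `/{app_id}/deploy` path, the `api_key` JSON field, and the `PLATFORM_API_KEY` variable name are assumptions, so check the Swagger UI at `/docs` for the actual deploy endpoint before relying on it.

```bash
# Deploy an existing app to the Embedchain platform via the local REST API.
# ASSUMPTIONS (verify in /docs): the endpoint is POST /{app_id}/deploy and it
# takes a JSON body with an "api_key" field. PLATFORM_API_KEY is a
# hypothetical variable name; the key is interpolated into the JSON as-is,
# so it must not contain double quotes or backslashes.
# Arguments: $1 = app_id, $2 = base URL (default: http://localhost:8080)
deploy_app() {
  local app_id="${1:-}"
  local base_url="${2:-http://localhost:8080}"

  if [[ -z "$app_id" || -z "${PLATFORM_API_KEY:-}" ]]; then
    echo "usage: PLATFORM_API_KEY=... deploy_app APP_ID [BASE_URL]" >&2
    return 2
  fi

  # -d sends the JSON body as a POST request.
  curl --fail --silent --show-error \
    -H "Content-Type: application/json" \
    -d "{\"api_key\": \"${PLATFORM_API_KEY}\"}" \
    "${base_url}/${app_id}/deploy"
}
```

For example: `PLATFORM_API_KEY=your-platform-key deploy_app my-first-app`.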
